2Q Caching: Optimizing Performance for Skewed Data Distributions

If you use an in-memory data store for caching, like Redis or Memcached, a famous cache eviction technique is LRU (Least Recently Used). LRU does a great job, especially if the access frequency of the data you're trying to cache follows a distribution close to a normal distribution. As the name suggests, it removes the least frequently used items in favor of adding new items to the cache

However, this can cause issues if what you're trying to cache has an access pattern that doesn't follow the bell curve – specifically, a long tail distribution (skewed distribution). When a long-tail distribution is combined with LFU, it can put unnecessary stress on your cache. An example of a long-tail distribution is in the caching of search engine queries, where a few search terms are extremely popular, but the vast majority of searches are less common, unique, or niche. LFU will keep evicting common or 'hot' search terms due to the frequency of less common search terms.

What is 2Q:

2Q is a low-overhead, high-performance buffer management algorithm designed to prioritize "hot" pages (those frequently accessed) for better performance.

How does it work?

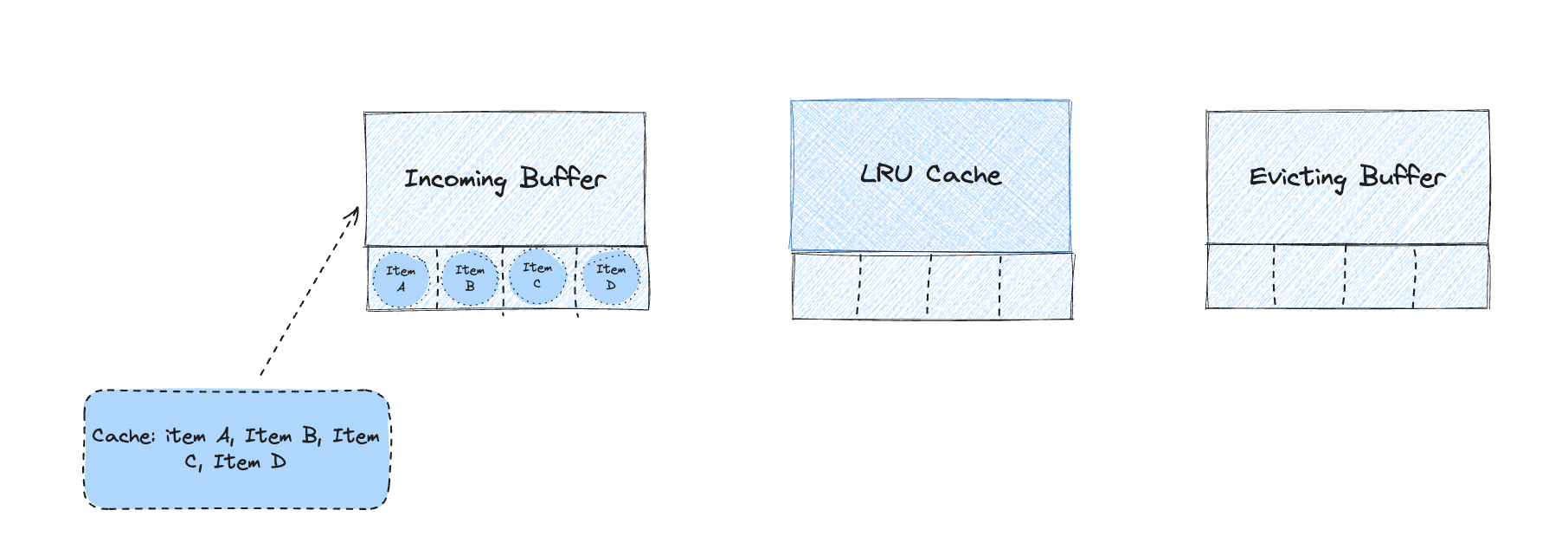

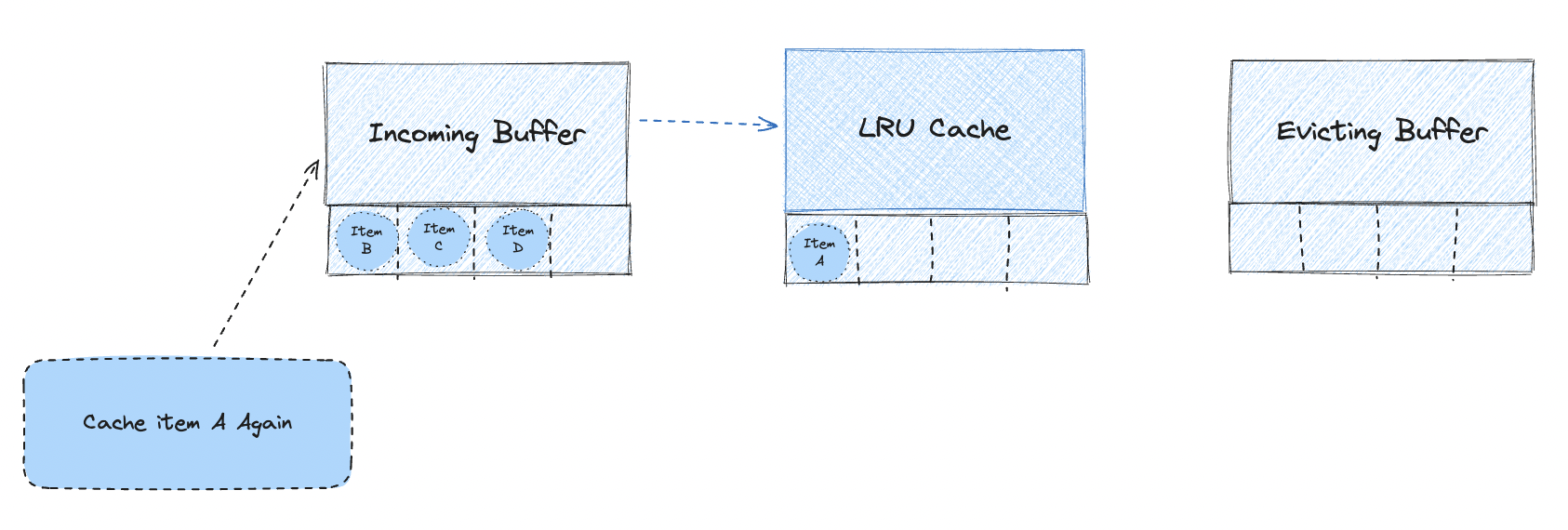

To simplify, 2Q creates an 'incoming' buffer that acts as a filter before the main LRU cache. Any item trying to enter the LRU cache must first pass through this incoming buffer. Only if an item is accessed again will it be promoted from the incoming buffer to the LRU cache.

but what if the buffer is full and we do not see it again and a new entry hits the cache? then it gets removed (technically the last item in the buffer) as that might be data from the Longtail we discussed earlier. this acts as a shield for the cache from having random values we are going to see only once from entering in the first place

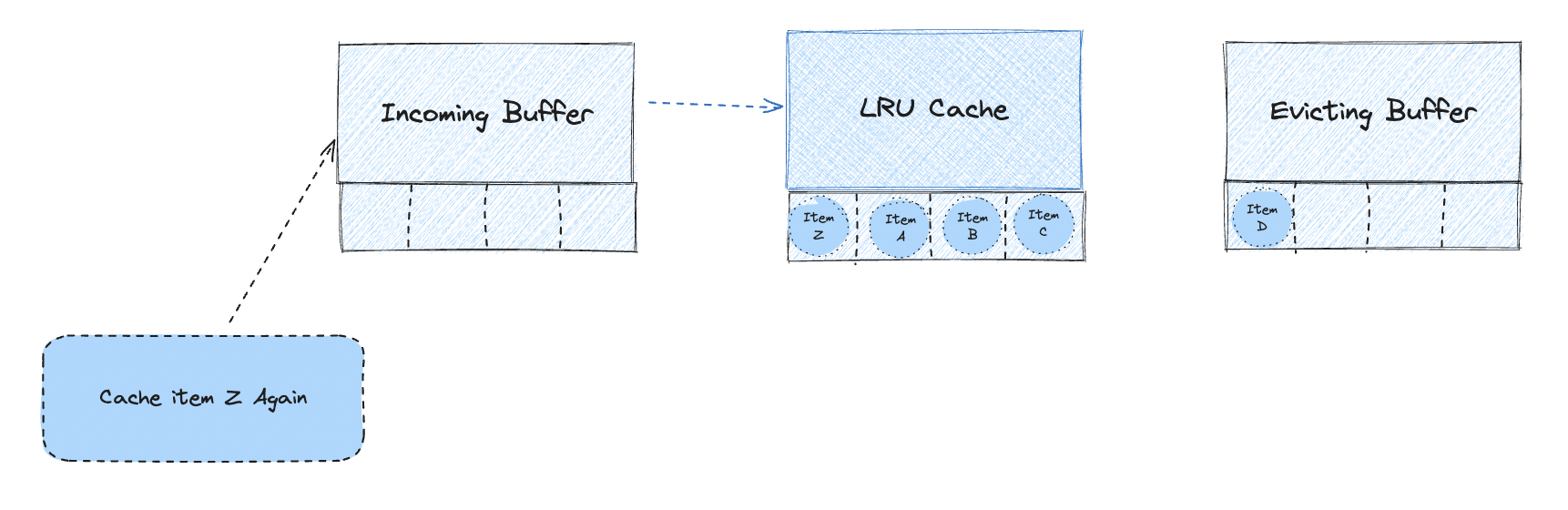

When the LRU cache is full and a new item is promoted from the Incoming buffer we do not evict the item immediately, instead, we move it into another buffer let's call it Evicting buffer, this buffer will keep hold of the item until it's also full and give it like another chance to move back the main cache/LRU cache.

The 2Q algorithm is an enhancement of the LRU Cache algorithm. It introduces two queues: one for pages accessed only once (Am) and another for pages accessed more than once (A1). This distinction allows the algorithm to better manage cache evictions by prioritizing frequently accessed pages while also considering recent access patterns.

Why you should care about this?

If your data follows a neat and predictable normal distribution, LRU might work just fine. But the real world is rarely so tidy! It's full of long-tail scenarios like search queries, e-commerce recommendations, and anything where a few items get tons of attention while the rest remain niche. 2Q helps your cache stay focused on the hits that matter, boosting performance and saving you from unnecessary headaches.

Increasing the cache size or even the TTL can be sometimes helpful, but in some cases, all you might need is to use a buffer like 2Q!

Sources :